How To Build An AI Model – A Step-by-Step Guide

- AI/ML

- July 26, 2024

AI model development can lead to crafting intelligent systems capable of learning, reasoning, problem-solving, perception, and language comprehension. But how is such an advanced AI model developed? This guide covers everything you need to know about building, evaluating, and deploying AI models while navigating challenges and exploring future trends.

From stock market trend analysis and prediction to personalized shopping experiences and self-driving cars, AI capabilities are flourishing in every industry. This isn’t a distant future; it’s happening now.

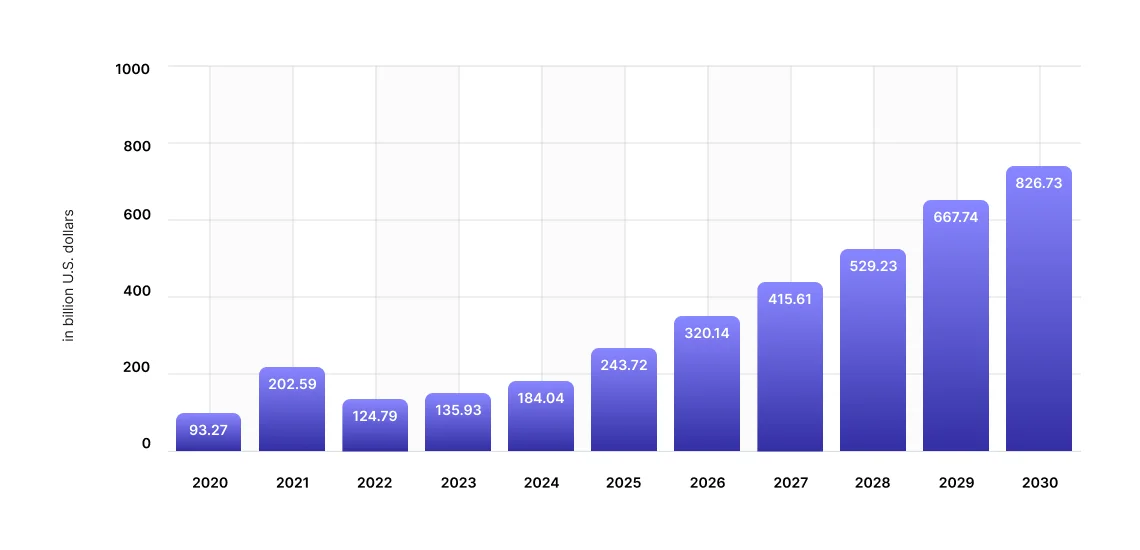

Given how AI has evolved over time, you can expect the AI market to grow to $826.73 billion by 2030, at a CAGR of 28.44% from 2024 to 2030.

From automation and analysis to generative tasks, AI is now capable of many processes. But do you know how it gets all of these capabilities? That’s all thanks to AI models!

However, getting acquainted with AI tools or using them in everyday business processes is not a complete solution on its own. If you need AI tailored to your business, you also need to invest in custom AI model development.

Before making that investment, it’s important to understand how these powerful AI models are developed.

This blog covers everything you need to know about how AI models are built, the challenges involved, best practices to avoid them, and AI model development cost analysis.

What is an AI Model?

An AI model is a mathematical and computational framework designed to perform tasks that typically require human intelligence. These tasks can include recognizing patterns, making decisions, predicting outcomes, and understanding natural language.

All of these AI applications are created using a combination of algorithms, machine learning models, deep learning techniques, and data, which are used to train the model to perform specific tasks. An advanced AI model is characterized by its ability to learn, reason, adapt, act, and solve the queries prompted by users.

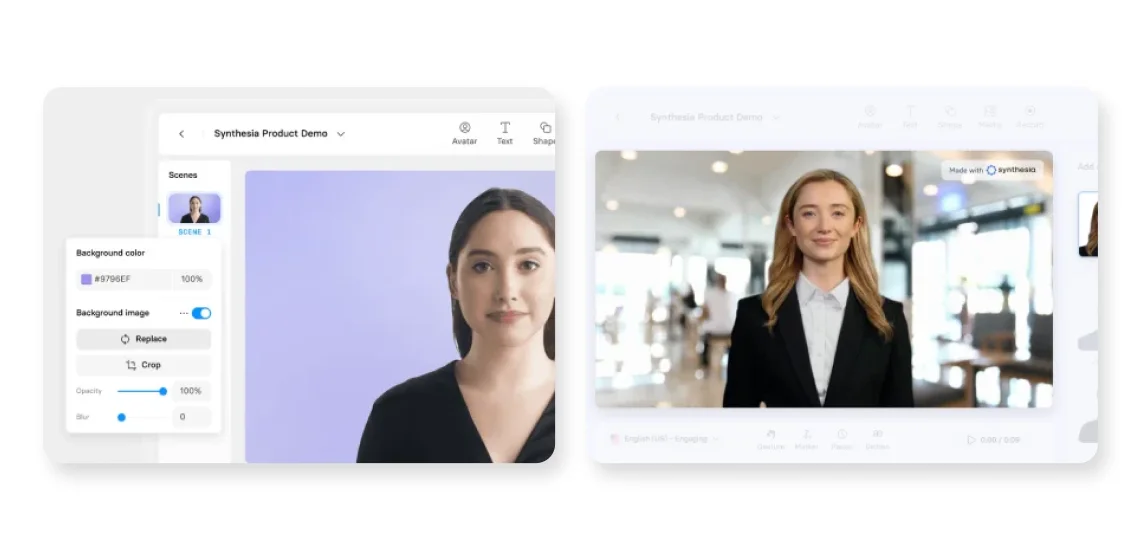

Take the example of Synthesia.AI, a platform we have worked on that specializes in video synthesis and creation. It allows users to generate realistic video content featuring human-like avatars that can speak multiple languages, all from text input. This technology uses deep learning and natural language processing to create videos that are highly realistic and customizable.

Our contributions to Synthesia AI model fine-tuning, along with many other AI system development projects, led to outcomes like a 50K+ customer base, 70-90% savings in user effort, and a 30% increase in system engagement.

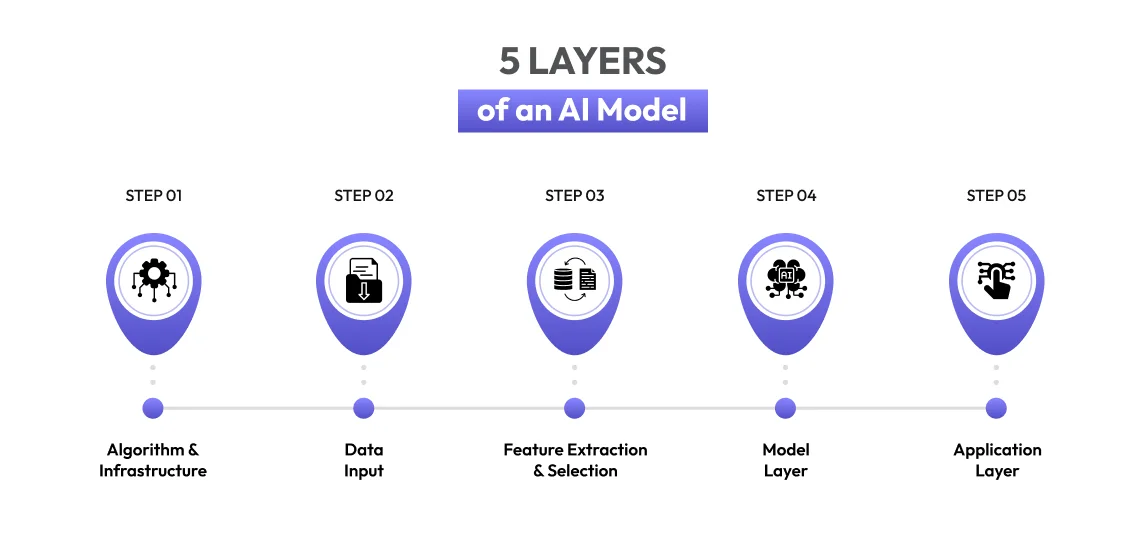

5 Layers of an AI Model

When you create AI models, you need to understand each layer and its specific function, from data input and preprocessing to feature extraction and model training. Understanding these five layers of AI models is essential for designing robust models, troubleshooting issues, and optimizing performance.

Algorithm & Infrastructure Layer

This layer encompasses the foundational technologies and infrastructure required to develop, train, and deploy AI models. It includes the computational resources, software frameworks, and hardware necessary to support the entire AI workflow.

GPUs, TPUs, CPUs, and other specialized hardware accelerators are used to speed up model training and inference.

Machine learning and deep learning libraries such as TensorFlow, PyTorch, scikit-learn, and Keras are used to build the AI/ML algorithms and the foundational infrastructure layer for your solution’s AI model.

Data Input Layer

This layer is responsible for the acquisition and ingestion of raw data from various sources. It ensures that data is collected efficiently and in a format suitable for processing and analysis.

Here, data sources can include databases, APIs, IoT devices, web scraping tools, data lakes, and other repositories.

After the data is gathered, it is pre-processed using ETL (Extract, Transform, Load) tools, data ingestion frameworks, and streaming data platforms (e.g., Apache Kafka).

The processed data is then stored in databases (SQL, NoSQL), data warehouses, and data lakes, where it is kept for AI model training. If you often deal with SQL-based data management and ETL processes, consider using an SQL Server ETL to build a strong data pipeline for real-time analytics and reporting.

Feature Extraction and Selection Layer

This layer focuses on transforming raw data into a set of features that can be used to train the AI model. It involves selecting the most relevant features to improve model performance and reduce complexity.

To do that, you’ll have to create new features from raw data through techniques such as normalization, scaling, encoding categorical variables, and creating interaction terms. Further, techniques like Principal Component Analysis (PCA) and t-SNE reduce the number of features while preserving important information.

For the final feature selection, methods like statistical tests, correlation analysis, and machine learning algorithms are used to select the most relevant features.
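
As a rough illustration of this layer, the sketch below uses scikit-learn to scale numeric columns, one-hot encode a categorical column, and then reduce the result with PCA. The column names and toy values are hypothetical and chosen only for illustration.

```python
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.decomposition import PCA
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical raw data: two numeric columns and one categorical column.
raw = pd.DataFrame({
    "age": [25, 32, 47, 51, 38, 29],
    "income": [40_000, 52_000, 88_000, 91_000, 60_000, 45_000],
    "plan": ["basic", "pro", "pro", "enterprise", "basic", "pro"],
})

# Scale the numeric columns and one-hot encode the categorical one.
preprocess = ColumnTransformer(
    transformers=[
        ("num", StandardScaler(), ["age", "income"]),
        ("cat", OneHotEncoder(handle_unknown="ignore"), ["plan"]),
    ],
    sparse_threshold=0.0,  # always return a dense array so PCA can consume it
)

# Reduce the 5 engineered columns (2 scaled + 3 one-hot) to 2 components.
feature_pipeline = Pipeline([
    ("preprocess", preprocess),
    ("pca", PCA(n_components=2)),
])

features = feature_pipeline.fit_transform(raw)
print(features.shape)
```

Wrapping the steps in a single pipeline means the same transformations can later be applied to new data with `feature_pipeline.transform(...)`.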

Model Layer

This is the core layer where the actual AI model is defined, trained, and validated. It includes the algorithms and architecture that process input features to make predictions.

The AI model architecture is built using neural networks (CNNs, RNNs, transformers), decision trees, ensemble methods, support vector machines, and more.

Hire AI developers from our team who use optimization algorithms like gradient descent and Adam, together with well-chosen loss functions and evaluation metrics, to train the AI/ML algorithms.

Training alone does not guarantee that the model generates output as expected, so hyperparameter tuning is also performed. Grid search, random search, and Bayesian optimization are used to find the hyperparameters that improve model performance.

Before moving the AI model to the application layer, you also need to validate it. Validation techniques like cross-validation and hold-out validation are used together with metrics such as accuracy, precision, recall, and F1 score.

Here, the F1 score is the harmonic mean of precision and recall, which makes it a more balanced measure than accuracy alone when classes are imbalanced.
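
For reference, precision, recall, and F1 score are conventionally defined from the counts of true positives (TP), false positives (FP), and false negatives (FN):

$$
\text{Precision} = \frac{TP}{TP + FP}, \qquad
\text{Recall} = \frac{TP}{TP + FN}, \qquad
F_1 = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}
$$

As a worked example with made-up counts, TP = 40, FP = 10, and FN = 20 give a precision of 40/50 = 0.80, a recall of 40/60 ≈ 0.67, and an F1 score of about 0.73.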

Application Layer

This layer deals with the deployment and integration of the trained AI model into real-world applications. It ensures that the model can be accessed and used effectively by end-users or other systems.

In the application layer, API development, user interface design, feature and third-party API integration, and AI model management (through monitoring and maintenance) come together to form a full-fledged AI application that users find helpful.

Importance of AI Models In A Software Ecosystem

AI models play a crucial role in a software ecosystem by bringing advanced capabilities and intelligence to various aspects of applications and systems. Some of the key reasons to have an AI-powered software in place include:

Enhanced Decision Making with Predictive Capabilities

AI models can analyze large volumes of data quickly and accurately, enabling better decision-making processes. Based on that analysis, they offer real-time insights as well as predictions (forecasting future outcomes from historical data).

They can uncover patterns, trends, and insights that may not be possible through traditional methods, thereby aiding in strategic planning and operational efficiency.

Given this capability, AI models are highly recommended for software systems that handle sales management, inventory management, risk assessment, and demand prediction. For example, our project Sidepocket, an AI-powered robo-advising app, helps investors with market risk assessment and offers investment tips.

Automation of Tasks with Enhanced Efficiency and Accuracy

AI models can automate repetitive and mundane tasks, freeing up human resources for more complex and creative endeavors. Beyond rule-based automation, AI models can be trained to perform tasks with a high degree of accuracy and consistency, reducing errors and improving overall system efficiency.

This is particularly beneficial in tasks requiring complex computations or processing vast amounts of data. Moreover, this can lead to increased productivity and cost savings within the software ecosystem.

Personalization and Customer Experience

AI models can personalize user experiences by analyzing user behavior and preferences. This allows software applications to tailor content, recommendations, and interactions to individual users, enhancing customer satisfaction and engagement.

Popular apps like Amazon, Netflix, Spotify, Instagram, YouTube, Google News, and many others offer personalized customer/user experiences that make their users spend more time on their platform, leading to better business opportunities.

Competitive Advantages

Creating AI models and integrating them into your software ecosystem also tends to give you a competitive edge by enabling faster innovation, better customer insights, and operational efficiencies. Organizations that leverage AI effectively are often better positioned to respond to market changes and customer demands.

There’s a reason behind it! Modern customers want everything to be personalized and curated as per their preferences, and when they get it, they tend to rely on such platforms.

Hence, software with AI capabilities often wins the trust of today’s tech-savvy users.

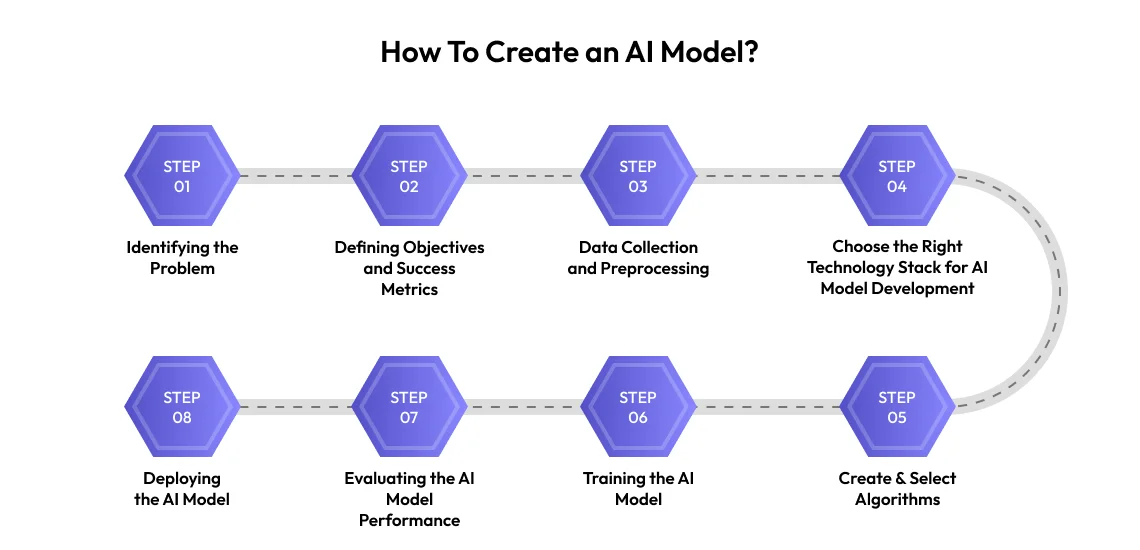

How To Create an AI Model?

Creating AI models involves a structured approach that combines domain expertise, data science, and machine learning techniques. Below, we outline the essential steps and provide detailed descriptions to guide you through the process of successfully building AI models:

STEP 1: Identifying the Problem

The first step in creating an AI model is to clearly define the problem you want to solve. This involves understanding the specific challenge, the context in which the AI will be applied, and the desired outcomes.

A well-defined problem statement ensures that the project has a focused direction and measurable objectives. In this step, support from expert AI developers will help a lot.

STEP 2: Defining Objectives and Success Metrics

Once the problem is identified, it’s crucial to establish clear objectives and success metrics. Objectives outline what the AI model aims to achieve, while success metrics provide quantifiable criteria to evaluate the model’s performance in terms of accuracy, precision, and processing speed.

Your AI model may target classification, prediction, generation, reinforcement learning, anomaly detection, recommendation, and more. Success metrics should be defined based on the specific objectives of the AI model.

For example, if you run an e-commerce store like Amazon, you’d want your AI model to focus on personalization and suggest products that users might be interested in buying. If the platform achieves that relevancy and the resulting sales boost, you can consider the AI model to be meeting its success metrics.

STEP 3: Data Collection and Preprocessing

Data is the foundation of any AI model. The more relevant the data is to the objectives and success metrics, the better the results the AI model can deliver once trained on it.

Hence, our expert data scientists for hire clean the data to remove inconsistencies. They handle missing values, normalize data scales, and perform feature extraction to create datasets relevant to the problem domain, so that the AI models can be trained to generate output of the desired quality.
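
The cleaning steps described above can be sketched with pandas as follows. This is a minimal example on a made-up table: the column names, the median/"unknown" fill strategy, and min-max normalization are assumptions for illustration, not a prescribed recipe.

```python
import numpy as np
import pandas as pd

# Hypothetical customer records with one duplicate row and some missing values.
df = pd.DataFrame({
    "age": [25.0, np.nan, 47.0, 47.0, 38.0],
    "monthly_spend": [120.0, 80.0, 150.0, 150.0, np.nan],
    "segment": ["retail", "retail", "wholesale", "wholesale", None],
})

# 1. Remove exact duplicate rows.
df = df.drop_duplicates().reset_index(drop=True)

# 2. Fill missing numeric values with the column median,
#    and missing categories with an explicit "unknown" label.
numeric_cols = ["age", "monthly_spend"]
df[numeric_cols] = df[numeric_cols].fillna(df[numeric_cols].median())
df["segment"] = df["segment"].fillna("unknown")

# 3. Min-max normalize the numeric columns to the [0, 1] range.
col_min = df[numeric_cols].min()
col_max = df[numeric_cols].max()
df[numeric_cols] = (df[numeric_cols] - col_min) / (col_max - col_min)

print(df)
```

In a real project, the fill values and scaling parameters should be computed on the training data only and then reused for validation and test data.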

STEP 4: Choose the Right Technology Stack for AI Model Development

When planning to build AI models for business-critical software, you need to choose the technology stack carefully. The right technology stack makes it easier for developers to build complex AI models that can meet your success metrics.

So, to create AI models for your business-centric software development project, consider the following modern technology stack. Our AI and ML developers follow the same for AI/ML projects:

| Category | Technologies |
| --- | --- |
| Languages | Python, JavaScript, TypeScript |
| Machine Learning and Deep Learning Frameworks | Scikit-learn, PyTorch, TensorFlow, Keras, XGBoost, Caffe, MXNet, AutoML, CNTK |
| Data Processing and Visualization | Pandas, NumPy, Matplotlib, Seaborn, Plotly, Power BI, Tableau |
| Natural Language Processing Technologies | NLTK, spaCy, Hugging Face, Transformers Library |
| Data Management | OpenML, ImgLab, OpenCV, Fivetran, Talend, Singer, Databricks, Snowflake, Pandas, Spark, Tecton, Feast, DVC, Pachyderm, Grafana, Censius, Fiddler |
| Neural Networks | Convolutional and Recurrent Neural Networks (LSTM, GRU, etc.), Autoencoders (VAE, DAE, SAE, etc.), Generative Adversarial Networks (GANs), Deep Q-Network (DQN), Feedforward Neural Network, Radial Basis Function Network, Modular Neural Network |
| GenAI Tools | LangChain, LlamaIndex, Hugging Face, OpenAI GPT-3.5/4, Llama, Mistral, Mixtral, TinyLlama, Google Gemma, Google Gemini, LLaVA, BERT, PaLM 2, GPT4All Models, Hugging Face Models, bloom-560m, DALL·E, Whisper, Stable Diffusion, Phi, Vicuna |
| Cloud | Amazon Web Services (AWS), Microsoft Azure, Google Cloud Platform (GCP) |

STEP 5: Create & Select Algorithms

After selecting the technology stack, the next step is to create or select the right algorithm for your AI model, one that suits the problem type and data characteristics.

For example, you can use:

- Regression & Predictive algorithms for predicting continuous values

- Classification algorithms for categorical outcomes

- Clustering algorithms for grouping data with similar characteristics

- Decision tree algorithms to train the AI model and make rule-based decisions

- CNN-based algorithms to perform tasks associated with images

- RNN-based algorithms to perform tasks associated with sequential data

To identify the best-performing algorithm, you can hire ML developers to experiment with multiple approaches and bring fresh perspectives to your existing processes.
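
One hedged way to run such an experiment is to score several candidate algorithms on the same data and compare the results. The sketch below assumes a classification problem and uses scikit-learn's bundled Iris dataset as a stand-in for your own data; the three candidates are arbitrary examples.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

# Stand-in dataset; replace with your own features and labels.
X, y = load_iris(return_X_y=True)

candidates = {
    "logistic_regression": LogisticRegression(max_iter=1000),
    "decision_tree": DecisionTreeClassifier(random_state=0),
    "k_nearest_neighbors": KNeighborsClassifier(n_neighbors=5),
}

# Mean 5-fold cross-validated accuracy for each candidate.
scores = {
    name: cross_val_score(model, X, y, cv=5, scoring="accuracy").mean()
    for name, model in candidates.items()
}

for name, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {score:.3f}")

best_name = max(scores, key=scores.get)
```

The scoring metric should match the success metrics defined in Step 2; accuracy is used here only because it is the simplest choice for a balanced dataset.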

STEP 6: Training the AI Model

Training the model involves feeding the training data into the selected algorithm and allowing it to learn patterns and relationships within the data.

This process typically involves optimizing the model parameters to minimize error and improve predictive accuracy. Techniques like cross-validation can be used to ensure robust training.
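
To make "optimizing the model parameters to minimize error" concrete, here is a minimal sketch of gradient descent fitting a one-variable linear model with NumPy. The synthetic data, learning rate, and number of epochs are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# Synthetic data generated from y = 3x + 2 plus a little noise.
x = rng.uniform(-1.0, 1.0, size=200)
y = 3.0 * x + 2.0 + rng.normal(0.0, 0.1, size=200)

w, b = 0.0, 0.0          # model parameters to learn
learning_rate = 0.1
losses = []

for epoch in range(500):
    y_pred = w * x + b
    error = y_pred - y
    losses.append(float(np.mean(error ** 2)))  # mean squared error

    # Gradients of the mean squared error with respect to w and b.
    grad_w = 2.0 * np.mean(error * x)
    grad_b = 2.0 * np.mean(error)

    # Step against the gradient to reduce the error.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(f"w={w:.2f}, b={b:.2f}, final MSE={losses[-1]:.4f}")
```

Frameworks like PyTorch and TensorFlow automate the gradient computation, but the loop of predicting, measuring error, and updating parameters is the same idea.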

In machine learning, “learning” is achieved by training models on sample datasets. The model picks up probabilistic trends and correlations in these datasets, which it then uses to meet the success metrics of the AI model.

When it comes to supervised and semi-supervised machine learning, the data scientists on board should label the data carefully for better results. The better the feature extraction, the less data is needed for supervised learning compared with unsupervised learning.

When training models, you must ensure that they are trained on real-world data that reflects the work they are supposed to do.

Along with datasets, data scientists also have to take care of the number of parameters a model has. The more parameters there are, the more complex the model becomes to train.

This challenge became evident when training LLMs such as OpenAI’s GPT-3, with 175 billion parameters, and BLOOM, with 176 billion parameters.

You cannot always rely on public data, as it can raise regulatory issues. Relevancy matters as well. After all, you can’t expect a model trained on social media threads to be fit for enterprise use.

To avoid such consequences, along with biases and poorly covered edge cases, you can use synthetic data generation to supplement your dataset and reduce overfitting and underfitting problems.

STEP 7: Evaluating the AI Model Performance

It is necessary to evaluate the performance of your AI model to check whether it is well trained. The results further help you optimize it to achieve success metrics such as accuracy and speed.

That can be done by considering the following factors:

Data Splitting

For this, data is split into three sets, each with a specific task:

- The training set is for AI model training.

- The validation set is for hyperparameter tuning and model validation.

- The test set is for evaluating the final performance of the AI model.
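
A three-way split like this can be sketched with two calls to scikit-learn's `train_test_split`. The 70/15/15 proportions and the random toy dataset below are assumptions for illustration; the right ratios depend on how much data you have.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical dataset: 1,000 samples, 5 features, binary labels.
rng = np.random.default_rng(seed=42)
X = rng.normal(size=(1000, 5))
y = rng.integers(0, 2, size=1000)

# First set aside 150 samples as the final test set.
X_temp, X_test, y_temp, y_test = train_test_split(
    X, y, test_size=150, random_state=42, stratify=y
)

# Then split the remaining 850 samples into training and validation sets.
X_train, X_val, y_train, y_val = train_test_split(
    X_temp, y_temp, test_size=150, random_state=42, stratify=y_temp
)

print(len(X_train), len(X_val), len(X_test))
```

Passing `stratify` keeps the class proportions similar across the three sets, which matters most when one class is rare.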

Performance Metrics

Choosing appropriate metrics based on the type of problem is essential:

- Classification: Accuracy, Precision, Recall, F1 Score, ROC-AUC.

- Regression: Mean Absolute Error (MAE), Mean Squared Error (MSE), Root Mean Squared Error (RMSE), R-squared.
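
As an illustration of the regression metrics listed above, the sketch below computes them directly with NumPy from their standard definitions. The true and predicted values are made up.

```python
import numpy as np

# Hypothetical true values and model predictions.
y_true = np.array([3.0, 5.0, 2.5, 7.0])
y_pred = np.array([2.5, 5.0, 4.0, 8.0])

errors = y_true - y_pred

mae = float(np.mean(np.abs(errors)))   # Mean Absolute Error
mse = float(np.mean(errors ** 2))      # Mean Squared Error
rmse = float(np.sqrt(mse))             # Root Mean Squared Error

# R-squared: 1 minus residual sum of squares over total sum of squares.
ss_res = float(np.sum(errors ** 2))
ss_tot = float(np.sum((y_true - y_true.mean()) ** 2))
r2 = 1.0 - ss_res / ss_tot

print(f"MAE={mae:.3f}, MSE={mse:.3f}, RMSE={rmse:.3f}, R2={r2:.3f}")
```

The same values are available from `sklearn.metrics` (`mean_absolute_error`, `mean_squared_error`, `r2_score`); writing them out here just shows what each metric measures.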

Cross-Validation

In cross-validation, part of the training data is held out and used as a validation set, and this can be repeated across different partitions of the data. It can be done by using non-exhaustive methods like k-fold, holdout, and Monte Carlo cross-validation or exhaustive methods like leave-p-out cross-validation.

Of all the mentioned methods, k-fold cross-validation is the most widely used to ensure that the model performs well across different subsets of the data. This helps to evaluate model performance robustly by averaging the results achieved through multiple training and validation cycles.
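
The k-fold procedure can be written out explicitly, as in the minimal sketch below. It assumes k = 5, a logistic regression model, and scikit-learn's bundled Iris dataset as placeholder data.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold

X, y = load_iris(return_X_y=True)

# Split the data into 5 folds; each fold serves once as the validation set.
kfold = KFold(n_splits=5, shuffle=True, random_state=0)

fold_scores = []
for train_idx, val_idx in kfold.split(X):
    model = LogisticRegression(max_iter=1000)
    model.fit(X[train_idx], y[train_idx])
    fold_scores.append(model.score(X[val_idx], y[val_idx]))

# Average the per-fold accuracy for a more stable estimate.
mean_score = float(np.mean(fold_scores))
print(f"fold accuracies: {np.round(fold_scores, 3)}, mean: {mean_score:.3f}")
```

A large spread between fold scores is itself a useful signal: it suggests the model's performance depends heavily on which samples it was trained on.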

Hyperparameter Tuning

Hyperparameters are settings that influence the training process and model performance. Hyperparameter tuning involves systematically adjusting these settings to find the optimal configuration. This can be done by techniques like grid search, random search, and Bayesian optimization.
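
As a sketch of grid search, the example below tries every combination in a small hyperparameter grid for a support vector classifier and keeps the best one according to cross-validated accuracy. The model, grid values, and dataset are assumptions for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)

# Candidate hyperparameter values: 3 x 2 = 6 combinations.
param_grid = {
    "C": [0.1, 1, 10],
    "kernel": ["linear", "rbf"],
}

# Evaluate each combination with 5-fold cross-validation.
search = GridSearchCV(SVC(), param_grid, cv=5, scoring="accuracy")
search.fit(X, y)

print(search.best_params_, round(search.best_score_, 3))
```

For larger search spaces, `RandomizedSearchCV` in the same module samples a fixed number of combinations instead of trying them all.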

Error Analysis

Error analysis examines where and why the model makes mistakes. Tools like confusion matrices (for classification) and residual plots (for regression) are helpful in this step.
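
For instance, a confusion matrix for a binary classifier can be produced with scikit-learn as sketched below. The labels and predictions are made up to show how the four cells break down the errors.

```python
from sklearn.metrics import confusion_matrix

# Hypothetical true labels and model predictions (1 = positive class).
y_true = [1, 0, 1, 1, 0, 0, 1, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0, 1, 0]

# Rows are true classes, columns are predicted classes, in the order [0, 1].
cm = confusion_matrix(y_true, y_pred, labels=[0, 1])
tn, fp, fn, tp = (int(v) for v in cm.ravel())

precision = tp / (tp + fp)
recall = tp / (tp + fn)

print(cm)
print(f"TN={tn}, FP={fp}, FN={fn}, TP={tp}")
print(f"precision={precision:.2f}, recall={recall:.2f}")
```

Looking at which cell the errors fall into (false positives versus false negatives) tells you what kind of mistake to prioritize fixing.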

Robustness Testing

It helps to evaluate how the model performs under different conditions and with various types of data:

- Adversarial Testing checks model robustness by introducing small perturbations into the input data.

- Stress Testing is done by introducing extreme values and edge cases.

- Out-of-sample Testing helps to evaluate the model on data from different distributions than the training data.
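
A simple way to start probing robustness is to perturb the test inputs and watch how accuracy changes. The sketch below adds random Gaussian noise of increasing strength; note that this is a basic noise-sensitivity check, not a true adversarial attack (which would search for worst-case perturbations). The dataset, model, and noise levels are assumptions.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)

model = LogisticRegression(max_iter=1000).fit(X_train, y_train)

rng = np.random.default_rng(seed=0)
results = {}

# Evaluate on clean inputs (std 0.0) and on increasingly noisy copies.
for noise_std in [0.0, 0.1, 0.5, 1.0, 2.0]:
    X_noisy = X_test + rng.normal(0.0, noise_std, size=X_test.shape)
    results[noise_std] = model.score(X_noisy, y_test)

for noise_std, accuracy in results.items():
    print(f"noise std {noise_std}: accuracy {accuracy:.3f}")
```

A model whose accuracy collapses under small perturbations may need more diverse training data or regularization before deployment.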

By following these steps, and others where needed, you can thoroughly test your AI model to ensure it is robust, reliable, and performs well in real-world scenarios.

STEP 8: Deploying the AI Model

Deploying the AI model involves integrating it into a production environment where it can be used to make real-time predictions or decisions. This requires setting up a computing infrastructure for model hosting, ensuring scalability to handle large volumes of data, implementing security measures, and establishing monitoring systems to track model performance and accuracy over time.
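
At its simplest, deployment means saving the trained model as an artifact and loading it inside the serving application. The sketch below shows only that core step, using `joblib`; the file location and the `predict` helper are hypothetical, and a production setup would wrap this in an API with the scaling, security, and monitoring described above.

```python
import os
import tempfile

import joblib
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

# Training side: fit a model and persist it as an artifact.
X, y = load_iris(return_X_y=True)
model = LogisticRegression(max_iter=1000).fit(X, y)

model_path = os.path.join(tempfile.mkdtemp(), "model.joblib")  # hypothetical location
joblib.dump(model, model_path)

# Serving side: load the artifact once at startup and reuse it per request.
loaded_model = joblib.load(model_path)


def predict(features):
    """Return the predicted class index for one sample of four measurements."""
    return int(loaded_model.predict([features])[0])


print(predict([5.1, 3.5, 1.4, 0.2]))
```

Keeping the saved artifact versioned alongside the code and data that produced it makes it easier to roll back if a new model underperforms in production.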

Challenges You Can Face During AI Model Development

Aiming for greater results also brings challenges. AI offers workload automation, personalization, and many other compelling perks, but to realize them, developers have to deal with challenges that range from data quality to ethical considerations. Let’s explore some of the challenges you can expect when building an AI model:

Data Quality and Quantity Issues

AI models require high-quality data at scale to be trained effectively. However, providing that data can involve the following big data management challenges:

- Incomplete data with missing values can affect the accuracy of the AI model.

- Noisy data, meaning data that is irrelevant or incorrect, can mislead the learning process or cause AI models to hallucinate.

- Imbalanced data can result in biased AI models that perform poorly on minority classes.

- Limited data availability can restrict the model’s ability to generalize well to new, unseen data.
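
Some of these issues can be detected early with a quick data audit. The sketch below, on a made-up fraud dataset, measures the share of missing values per column, checks class balance, and derives "balanced" class weights (total samples divided by the number of classes times the class count) that many training APIs accept to compensate for imbalance.

```python
import numpy as np
import pandas as pd

# Hypothetical transactions: 2 missing amounts, and only 2 of 10 rows are fraud.
df = pd.DataFrame({
    "amount": [10.0, 25.0, np.nan, 40.0, 15.0, np.nan, 30.0, 22.0, 18.0, 55.0],
    "is_fraud": [0, 0, 0, 0, 0, 0, 0, 0, 1, 1],
})

# Share of missing values in each column.
missing_share = df.isna().mean()

# Share of each class in the label column.
class_share = df["is_fraud"].value_counts(normalize=True)

# Balanced class weights: n_samples / (n_classes * class_count).
class_counts = df["is_fraud"].value_counts()
class_weights = len(df) / (len(class_counts) * class_counts)

print(missing_share)
print(class_share)
print(class_weights)
```

Here the rare fraud class receives a larger weight than the majority class, so mistakes on it count for more during training.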

Bias and Fairness in AI Models

Think of AI as a kid learning new languages, computation techniques, and more. Whatever study samples you teach the kid with will shape how the kid learns.

So, when training AI, you have to be careful that the data you feed it does not pass on biases present in the training data.

If that happens, the AI model can lose its trustworthiness, resulting in users abandoning your product. You can expect the following challenges related to AI model bias and fairness:

- Historical bias is bias that already resides in the historical data.

- Sampling bias occurs when the training data contains non-representative samples, resulting in biased predictions.

- Algorithmic bias can arise when designing the algorithms.
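
One common starting point for measuring bias is to compare how often the model predicts the positive outcome for each group, often called the demographic parity difference. The sketch below computes it with NumPy; the group labels and predictions are made up, and this is only one of several fairness metrics.

```python
import numpy as np

# Hypothetical group membership and model predictions (1 = approved).
group = np.array(["A"] * 5 + ["B"] * 5)
y_pred = np.array([1, 1, 1, 0, 1, 1, 0, 0, 0, 1])


def selection_rates(y_pred, group):
    """Share of positive predictions within each group."""
    return {
        str(g): float(np.mean(y_pred[group == g]))
        for g in np.unique(group)
    }


rates = selection_rates(y_pred, group)

# Demographic parity difference: gap between the highest and lowest rate.
parity_gap = max(rates.values()) - min(rates.values())

print(rates, round(parity_gap, 2))
```

A gap close to zero means the groups receive positive predictions at similar rates; a large gap is a prompt to investigate the training data and features, not proof of unfairness on its own.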

Scalability and Performance

Even the term “AI model” hints at how complex development can be. And AI models really are complex, from design and development to maintenance.

In the digital world, any product can receive high demand and traffic at any time. To deal with that, AI models must scale efficiently and maintain performance, which is crucial for real-world applications. But making a complex AI model scalable can involve the following challenges:

- Provisioning and managing the significant computational power and memory that AI models need to run smoothly.

- Designing AI models and their computations to ensure quick responses for real-time applications.

- Creating robust data strategies to process and deal with data at scale.

- Adopting technologies like cloud and DevOps so that AI models can scale up and down with request load in a production environment.

Ethical and Legal Implications

AI models deal with data, learn from it, and keep it at their core. And where there is data, there will always be privacy and ethical concerns. Hence, you have to develop an AI model with its ethical and legal responsibilities in mind. In doing so, you can expect to face challenges like:

- Ensuring that the data used does not violate user privacy or data protection regulations.

- Making AI models interpretable and transparent to stakeholders.

- Defining the accountability for the outcomes produced by AI models.

- Adhering to the laws and regulations that govern the use of AI in different domains.

You can avoid all these AI model development challenges with assistance from an expert team of AI/ML development service providers with a proven track record.

Best Practices for AI Model Development

You can also avoid the above-mentioned challenges associated with making AI models by following the AI development best practices, which include:

- Clearly outline the AI model objectives and success metrics so that it is built to deliver the outputs you’ve planned.

- Ensure the data you collect for the AI model development project is accurate, complete, and resembles real-life application usage.

- Update and clean the data regularly to maintain its quality.

- Analyze data for potential biases and address them proactively by implementing fairness metrics to treat all groups fairly.

- Use robust data processing techniques to handle missing values and normalize, clean, and transform data to make it suitable for model training.

- Choose algorithms that are well-suited to the specific problem and data characteristics by experimenting with different AI models.

- Employ cross-validation techniques like k-fold to assess the AI model performance reliably.

- Use techniques like SHAP or LIME to explain the model’s predictions and make it interpretable.

- Use hyperparameter tuning techniques like grid search, random search, or Bayesian optimization to improve model accuracy and performance.

- Ensure the model adheres to ethical guidelines and legal regulations for data privacy, transparency, and accountability.

- Plan the AI model deployment for better scalability, latency, and resource management.

How Much Does it Cost To Create an AI Model?

You can expect the cost of AI model development to range from $10,000 to $300,000, and it can reach millions of dollars for the most advanced systems. However, it varies based on factors like the complexity of the model, development approach, data needs, talent and expertise, location of the talent, timeline, and many others.

If you’re planning to build simple AI-powered chatbots like Replika or basic machine learning models, these can cost thousands of dollars. More advanced systems, like computer vision solutions, natural language processing solutions, or generative AI software that rely on big data and deep learning, can cost millions.

Moreover, building an AI model from scratch requires a lot more time and money than using pre-existing solutions or cloud-based platforms.

If you’re on a tight budget, you can always consider using pre-trained models, open-source tools, and cloud-based platforms that offer AI services.

For further clarity on the cost of creating an AI model, you can talk to an AI development company. Then, they can assess your specific needs and give you a more accurate estimate.

How MindInventory Can Help You With AI Model Development

Whether you’re developing AI models for enterprises or rising startup products, strategic development planning and meticulous execution should always be in place. Failing to do so can often lead to the slow failure of the AI model because of biased outcomes, unmet legal and ethical requirements for data, and more.

Not just that, you also have to optimize your AI model to stay relevant to growing user demands and AI technology trends. Dealing with all of this alone, without assistance from AI experts, can be difficult.

That’s where MindInventory comes in as your ideal AI/ML development company. Our AI experts can help you with well-planned AI model development with a strategic approach and considering all contingencies. Schedule a call with our experts to discuss your AI project and get a development plan with cost estimation that benefits your business.

FAQs on AI Model Development

The future of AI model development is poised to be transformative, driven by advancements and innovations that will redefine how we create and deploy intelligent systems. In the future, you can expect AI to take the shape of explainable AI, federated learning models, real-time intelligence, bias-free AI, and hybrid AI models that bring the best of both worlds to industries at an advanced level.

A simple AI project with a small dataset may take 3-6 months from start to finish. More complex projects with large datasets, advanced techniques like deep learning, and extensive experimentation may take 6-12 months or longer.

To build a generative AI model, you can start by determining the objective and gathering high-quality datasets relevant to your tasks at scale. Next, select an appropriate architecture from options like GANs, VAEs, or GPT. After this, proceed with building, training, and tuning the model parameters. Optimize for better accuracy and speed to ensure the generative AI model performs well.

AI significantly impacts enterprises by automating tasks, enhancing decision-making, personalizing customer experiences, driving innovation, improving security, and reducing costs. It also boosts scalability, provides competitive advantages, increases employee productivity, and optimizes supply chain management. Overall, AI transforms business operations and interactions, making enterprises more efficient and agile.

Because AI systems are data-intensive, their security can be prone to threats like data breaches, adversarial attacks, and unauthorized access if they are not developed with all loopholes in mind.

You can secure AI models by ensuring robust data encryption, regular security audits, adversarial training to detect and counteract malicious inputs, and compliance with security standards.

When implementing AI models, you can consider factors like data quality, algorithm selection, scalability, integration, ethics and biases, performance monitoring, and compliance.